Text Mining in Academic Research: Methods, Applications, and Challenges

Invited Talk @ Sogang University, Graduate School of Metaverse

2023-05-09

Changjun Lee

Hanyang University

Dep. Media & Social Informatics

About me

Computational Social Scientist

Network Scientist

Interdisciplinary Scholar

My research focuses on utilizing computational methods to tackle a wide range of social phenomena, including technology evolution & regional growth, knowledge management, and technology & media innovation. I am passionate about using technology and data to drive innovation and solve real-world problems.

Research

#Innovation #Media #Technology #PublicPolicy

Teaching

#DataScience #culture&tech

Introduction

Importance of Text Mining in Academic Research

Growth of digital text data: Exponential increase in research publications, conference proceedings, and digital repositories.

Time-saving: Text mining techniques automate analysis, reducing manual labor and time-consuming tasks.

Uncovering hidden patterns: Detects patterns, trends, and relationships in large datasets that are hard to find through manual analysis.

Enhancing interdisciplinary research: Facilitates collaboration and knowledge transfer between different research fields by discovering connections and insights across disciplines.

Importance in Media Studies: Analyzing vast amounts of media content (news articles, social media posts, multimedia transcripts) to understand public opinion, sentiment, and the impact of media on society. Text mining enables efficient examination of media biases, framing, and agenda-setting, as well as tracking emerging trends and topics.

Introduction

Unlocking the Power of Text Data

Unveil valuable insights and hidden patterns

Harness natural language processing (NLP), machine learning (ML), and statistical techniques

Text Mining: A Synergy of Techniques

Data Collection: Gathering textual data from diverse sources

Pre-processing: Cleaning and transforming raw text for analysis

Analysis: Employing NLP, machine learning, and statistical methods to uncover patterns

Interpretation: Making sense of the results and deriving actionable insights

Visualization: Effectively presenting findings through engaging visual aids

Overview of the talk

🛠️ Text Mining Techniques

- Discovering powerful tools and methodologies

🎓 Applications in Academic Research

- Exploring the impact of text mining across disciplines

⚠️ Challenges and Limitations

- Navigating the hurdles and constraints

🚀 Future Directions and Conclusion

- Envisioning the evolving landscape of text mining

Text Mining Techniques

A. Pre-processing: The foundation for accurate analysis (often around 70% of the time and labour)

B. Feature Extraction: Transforming text into meaningful representations

C. Text Classification: Categorizing documents based on content

D. Text Clustering: Grouping similar documents together

E. Sentiment Analysis: Decoding emotions and opinions in text

F. Named Entity Recognition: Identifying and classifying entities in text

G. Relation Extraction: Discovering relationships between entities

Text Mining Techniques

Pre-processing

Tokenization

Splitting text into individual words or tokens

library(tidyverse)

library(tidytext)

text <- "Text mining is an important technique in academic research."

text_df <- tibble(line = 1, text = text)

text_df

# A tibble: 1 × 2

line text

<dbl> <chr>

1 1 Text mining is an important technique in academic research.

text_df %>% unnest_tokens(word, text)

# A tibble: 9 × 2

line word

<dbl> <chr>

1 1 text

2 1 mining

3 1 is

4 1 an

5 1 important

6 1 technique

7 1 in

8 1 academic

9 1 research

Text Mining Techniques

Pre-processing

Stop word removal

Removing common words that do not contribute to meaning
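
The two pre-processing steps so far can be sketched in base R without any packages. This is a minimal illustration, not the code behind the slide output: `toy_stop_words` is a tiny hypothetical list standing in for a full stop word lexicon, and "important" is included in it only because the slide's output drops that word as well.

```r
# Base-R sketch of tokenization followed by stop word removal.
# `toy_stop_words` is a hypothetical mini-list, not a real lexicon.
text <- "Text mining is an important technique in academic research."

# Lowercase, then split on anything that is not a letter or apostrophe
tokens <- strsplit(tolower(text), "[^a-z']+")[[1]]
tokens <- tokens[tokens != ""]

toy_stop_words <- c("is", "an", "in", "important")

# Keep only the tokens that are not in the stop list
content_tokens <- tokens[!tokens %in% toy_stop_words]
print(content_tokens)
```

With a real lexicon the list is far longer, so which words survive depends heavily on the lexicon chosen.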

# A tibble: 1,149 × 2

word lexicon

<chr> <chr>

1 a SMART

2 a's SMART

3 able SMART

4 about SMART

5 above SMART

6 according SMART

7 accordingly SMART

8 across SMART

9 actually SMART

10 after SMART

# ℹ 1,139 more rows

# A tibble: 5 × 2

line word

<dbl> <chr>

1 1 text

2 1 mining

3 1 technique

4 1 academic

5 1 research

Text Mining Techniques

Pre-processing

Stemming and Lemmatization

Reducing words to their root or base form

For Korean text, this takes the form of morphological analysis (decomposing text into nouns, verbs, adjectives, and so on)
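
To make "reducing words to their root" concrete, here is a deliberately crude suffix-stripping sketch in base R. It is not the Porter algorithm or a lemmatizer: the function name `naive_stem` and its suffix list are assumptions made for illustration, and dedicated stemming packages should be preferred in practice.

```r
# Naive suffix stripping: a crude stand-in for a real stemmer.
# The suffix list is an illustrative assumption, not the Porter algorithm.
naive_stem <- function(words) {
  # Strip one common English suffix if at least 3 characters remain
  sub("^(.{3,}?)(ing|ed|es|s)$", "\\1", words, perl = TRUE)
}

words <- c("mining", "mined", "mines", "techniques", "research")
stems <- naive_stem(words)
print(stems)
```

The three inflected forms of "mine" collapse to one stem, which is the point of the step. The stem itself need not be a dictionary word, which is where lemmatization differs.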

Text Mining Techniques

Feature Extraction

Bag of Words

Creating a document-term matrix with word frequencies

texts <- c("Text mining is important in academic research.",

"Feature extraction is a crucial step in text mining.",

"Cats and dogs are popular pets.",

"Elephants are large animals.",

"Whales are mammals that live in the ocean.")

text_df <- tibble(doc_id = 1:length(texts), text = texts)

text_df

# A tibble: 5 × 2

doc_id text

<int> <chr>

1 1 Text mining is important in academic research.

2 2 Feature extraction is a crucial step in text mining.

3 3 Cats and dogs are popular pets.

4 4 Elephants are large animals.

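5 5 Whales are mammals that live in the ocean.

# Tokenize the documents into one word per row

tokens <- text_df %>% unnest_tokens(word, text)

tokens

# A tibble: 34 × 2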

doc_id word

<int> <chr>

1 1 text

2 1 mining

3 1 is

4 1 important

5 1 in

6 1 academic

7 1 research

8 2 feature

9 2 extraction

10 2 is

# ℹ 24 more rows

# Create a Bag of Words representation

bow <- tokens %>%

count(doc_id, word) %>%

spread(key = word, value = n, fill = 0)

bow

# A tibble: 5 × 28

doc_id a academic and animals are cats crucial dogs elephants

<int> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 1 0 1 0 0 0 0 0 0 0

2 2 1 0 0 0 0 0 1 0 0

3 3 0 0 1 0 1 1 0 1 0

4 4 0 0 0 1 1 0 0 0 1

5 5 0 0 0 0 1 0 0 0 0

# ℹ 18 more variables: extraction <dbl>, feature <dbl>, important <dbl>,

# `in` <dbl>, is <dbl>, large <dbl>, live <dbl>, mammals <dbl>, mining <dbl>,

# ocean <dbl>, pets <dbl>, popular <dbl>, research <dbl>, step <dbl>,

# text <dbl>, that <dbl>, the <dbl>, whales <dbl>

Text Mining Techniques

Feature Extraction

Term Frequency-Inverse Document Frequency (TF-IDF)

Weighting a word, \(W_{(t,d)}\), by its importance within and across documents shows how distinctive (or unique) the term \(t\) is in a document \(d\).

\[ W_{(t,d)} = tf_{(t,d)} \times idf_{(t)} \]

\[ W_{(t,d)} = tf_{(t,d)} \times \log\left(\frac{N}{df_{t}}\right) \]

\(t\) : a term

\(d\) : a document

\(tf_{(t,d)}\) : frequency of term \(t\) (e.g. a word) in document \(d\) (e.g. a sentence or an article)

\(df_{t}\) : number of documents containing term \(t\)

\(N\) : total number of documents

A high \(tf_{(t,d)}\) indicates that the term occurs often within the document, while a high \(df_{t}\) indicates that the term is used across many documents (e.g., common verbs). Multiplying by \(idf_{(t)}\) down-weights such widespread terms. TF-IDF therefore captures how distinctive and important a term is for a document, given its prevalence across the collection.
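
The formula can be checked by hand in base R on the five example sentences. This is a sketch under two assumptions: term frequency is taken as a proportion of the document's length, and the logarithm is the natural log. The helper name `tf_idf_score` is made up for this illustration.

```r
# Worked TF-IDF computation in base R (natural logarithm, proportional tf).
docs <- list(
  c("text", "mining", "is", "important", "in", "academic", "research"),
  c("feature", "extraction", "is", "a", "crucial", "step", "in", "text", "mining"),
  c("cats", "and", "dogs", "are", "popular", "pets"),
  c("elephants", "are", "large", "animals"),
  c("whales", "are", "mammals", "that", "live", "in", "the", "ocean")
)

tf_idf_score <- function(term, doc_index, docs) {
  tf  <- sum(docs[[doc_index]] == term) / length(docs[[doc_index]])  # tf_(t,d)
  df  <- sum(vapply(docs, function(d) term %in% d, logical(1)))      # df_t
  idf <- log(length(docs) / df)                                      # log(N / df_t)
  tf * idf
}

score_academic <- tf_idf_score("academic", 1, docs)
score_in       <- tf_idf_score("in", 1, docs)
print(round(c(academic = score_academic, `in` = score_in), 4))
```

"academic" appears in one document only, so its weight (about 0.230) is roughly three times that of "in" (about 0.073), which appears in three of the five documents.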

As an example,

# Calculate the TF-IDF scores

tf_idf <- tokens %>%

count(doc_id, word) %>%

bind_tf_idf(word, doc_id, n)

tf_idf

# A tibble: 34 × 6

doc_id word n tf idf tf_idf

<int> <chr> <int> <dbl> <dbl> <dbl>

1 1 academic 1 0.143 1.61 0.230

2 1 important 1 0.143 1.61 0.230

3 1 in 1 0.143 0.511 0.0730

4 1 is 1 0.143 0.916 0.131

5 1 mining 1 0.143 0.916 0.131

6 1 research 1 0.143 1.61 0.230

7 1 text 1 0.143 0.916 0.131

8 2 a 1 0.111 1.61 0.179

9 2 crucial 1 0.111 1.61 0.179

10 2 extraction 1 0.111 1.61 0.179

# ℹ 24 more rows

# Spread into a wide format

tf_idf_matrix <- tf_idf %>%

select(doc_id, word, tf_idf) %>%

spread(key = word, value = tf_idf, fill = 0)

tf_idf_matrix

# A tibble: 5 × 28

doc_id a academic and animals are cats crucial dogs elephants

<int> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 1 0 0.230 0 0 0 0 0 0 0

2 2 0.179 0 0 0 0 0 0.179 0 0

3 3 0 0 0.268 0 0.0851 0.268 0 0.268 0

4 4 0 0 0 0.402 0.128 0 0 0 0.402

5 5 0 0 0 0 0.0639 0 0 0 0

# ℹ 18 more variables: extraction <dbl>, feature <dbl>, important <dbl>,

# `in` <dbl>, is <dbl>, large <dbl>, live <dbl>, mammals <dbl>, mining <dbl>,

# ocean <dbl>, pets <dbl>, popular <dbl>, research <dbl>, step <dbl>,

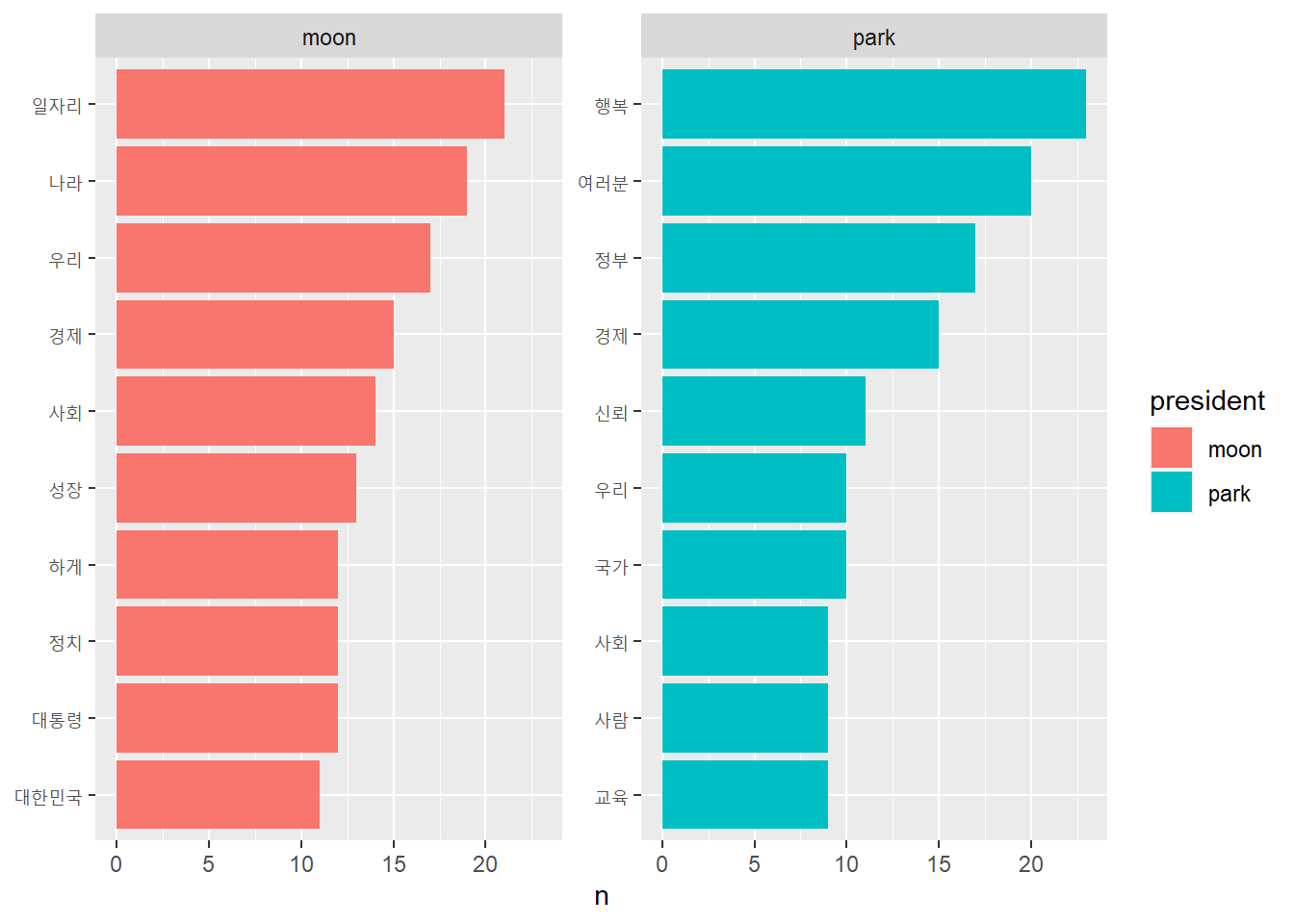

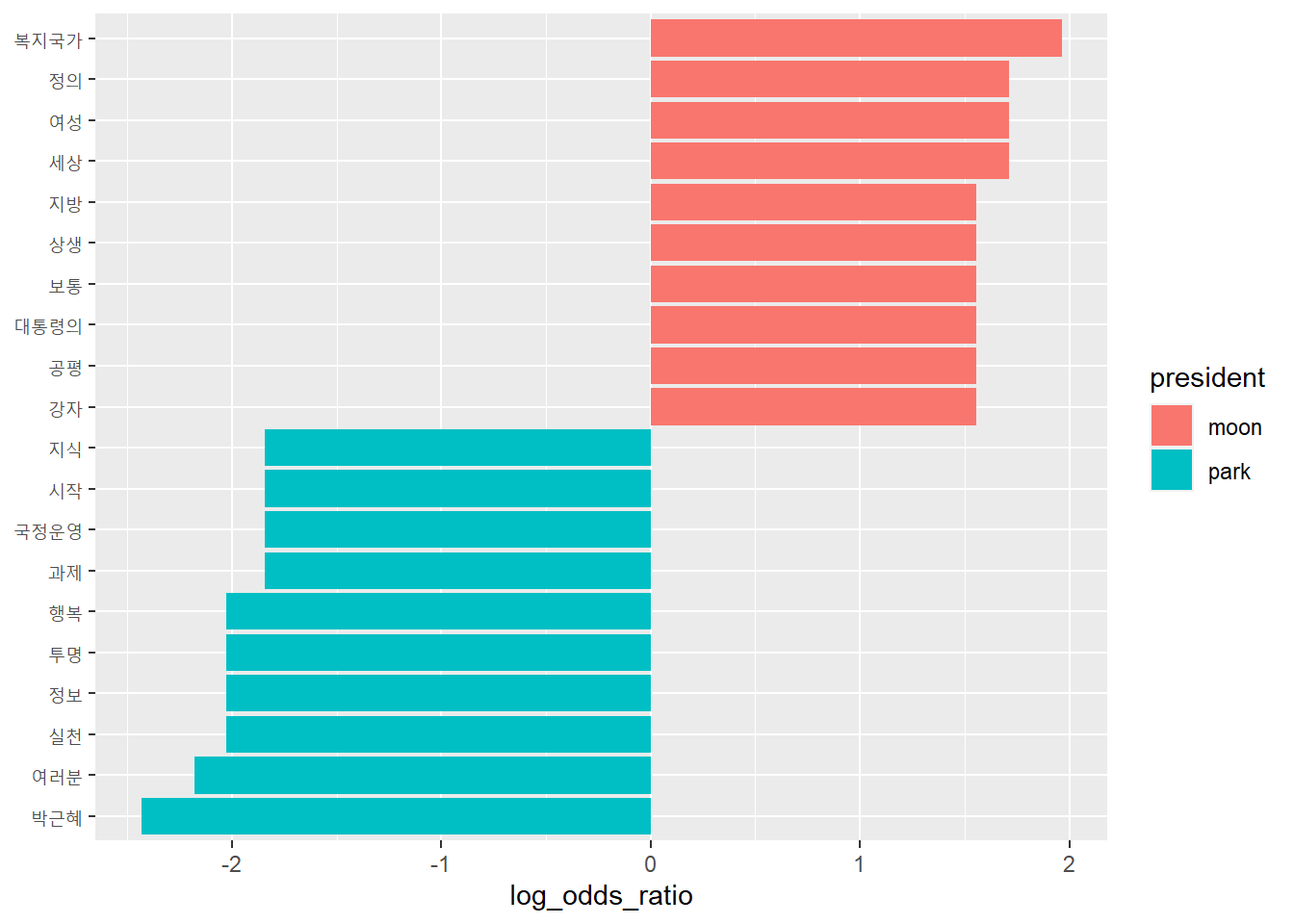

# text <dbl>, that <dbl>, the <dbl>, whales <dbl>Another example: Moon vs. Park speech

- Compare two speeches based on the just frequency of words

- Compare based on TF-IDF

Text Mining Techniques

Feature Extraction

Word Embeddings

Mapping words to continuous vector spaces based on their semantic relationships

Represents words as fixed-size vectors in continuous space

Captures semantic relationships and linguistic patterns between words

Common algorithms: Word2Vec, GloVe, FastText → ChatGPT

Preserves semantic and syntactic properties in high-dimensional vector spaces

Words with similar meanings or usage patterns are closer in vector space

Applications: sentiment analysis, document classification, language translation, information retrieval
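
"Closer in vector space" is usually measured with cosine similarity. The sketch below uses made-up three-dimensional vectors purely for illustration: real embeddings are learned from data and have hundreds of dimensions, and the numbers here are assumptions, not output from Word2Vec, GloVe, or FastText.

```r
# Hypothetical 3-dimensional "embeddings"; values are invented for illustration.
embeddings <- rbind(
  king  = c(0.90, 0.80, 0.10),
  queen = c(0.85, 0.75, 0.20),
  apple = c(0.10, 0.20, 0.90)
)

# Cosine similarity: dot product divided by the product of the vector lengths
cosine_similarity <- function(a, b) {
  sum(a * b) / (sqrt(sum(a^2)) * sqrt(sum(b^2)))
}

sim_king_queen <- cosine_similarity(embeddings["king", ], embeddings["queen", ])
sim_king_apple <- cosine_similarity(embeddings["king", ], embeddings["apple", ])
print(round(c(king_queen = sim_king_queen, king_apple = sim_king_apple), 3))
```

Vectors pointing in nearly the same direction score close to 1, while unrelated words score much lower.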

Text Mining Techniques

Text Classification

Naïve Bayes

Support Vector Machines

Neural Networks

Decision Trees

Bayes’ Theorem

Bayes’ theorem is a fundamental theorem in probability theory that describes the relationship between the conditional probabilities of two events (here, A and B). It states that the probability of event A given event B is equal to the probability of event B given event A multiplied by the probability of event A, divided by the probability of event B. Mathematically, this can be written as:

\[ P(A|B) = \frac{P(B|A)P(A)}{P(B)} \]

where:

\(P(A|B)\) is the probability of event A given event B (known as the posterior probability)

\(P(B|A)\) is the probability of event B given event A (known as the likelihood)

\(P(A)\) is the probability of event A (known as the prior probability)

\(P(B)\) is the probability of event B (known as the evidence)

The Naive Bayes Algorithm

The Naive Bayes algorithm uses Bayes’ theorem to predict the probability of each class label given a set of observed features. The algorithm assumes that the features are conditionally independent given the class label, which allows the algorithm to simplify the calculations involved in determining the probability of each class label.

Let \(X = (X_1, X_2, ..., X_n)\) represent the set of observed features, and let Y represent the class label. The goal is to predict the probability of each class label given X, i.e. \(P(Y|X)\). Using Bayes’ theorem, we can write:

\[ P(Y|X) = \frac{P(X|Y)P(Y)}{P(X)} \]

where:

\(P(Y|X)\) is the posterior probability of Y given X

\(P(X|Y)\) is the likelihood of X given Y

\(P(Y)\) is the prior probability of Y

\(P(X)\) is the evidence

The Naive Bayes algorithm assumes that the features \(X_1, X_2, ..., X_n\) are conditionally independent given Y, which means that:

\[ P(X|Y) = P(X_1|Y) \times P(X_2|Y) \times \ldots \times P(X_n|Y) \]

Using this assumption, we can rewrite the equation for \(P(Y|X)\) as:

\[ P(Y|X) = \frac{P(Y)P(X_1|Y)P(X_2|Y) \cdots P(X_n|Y)}{P(X)} \]

The evidence \(P(X)\) is a constant for a given set of features X, so we can ignore it for the purposes of classification. Therefore, we can simplify the equation to:

\[ P(Y|X) \propto P(Y) \times P(X_1|Y) \times P(X_2|Y) \times \ldots \times P(X_n|Y) \]

The Naive Bayes algorithm calculates the likelihoods \(P(X_i|Y)\) for each feature and class label from the training data, and uses these likelihoods to predict the probability of each class label given a new set of features. The algorithm selects the class label with the highest probability as the predicted class label.
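
The proportional form above can be sketched as a tiny word-count classifier in base R. Everything in the example is an assumption for illustration: the four training emails are invented, the helper `log_score` is hypothetical, and add-one (Laplace) smoothing is added so that unseen words do not produce zero probabilities. Scores are summed in log space, which is equivalent to multiplying the probabilities.

```r
# Toy training set (hypothetical): tokenized emails with class labels
train <- list(
  list(label = "spam", words = c("buy", "viagra", "now")),
  list(label = "spam", words = c("cheap", "fund", "offer")),
  list(label = "ham",  words = c("meeting", "agenda", "attached")),
  list(label = "ham",  words = c("lunch", "meeting", "tomorrow"))
)
vocab  <- unique(unlist(lapply(train, `[[`, "words")))
labels <- vapply(train, `[[`, character(1), "label")

# log P(Y) + sum_i log P(X_i | Y), with add-one smoothing on word counts
log_score <- function(new_words, class) {
  class_words <- unlist(lapply(train[labels == class], `[[`, "words"))
  prior <- mean(labels == class)
  likelihoods <- vapply(new_words, function(w) {
    (sum(class_words == w) + 1) / (length(class_words) + length(vocab))
  }, numeric(1))
  log(prior) + sum(log(likelihoods))
}

new_email <- c("buy", "fund")
scores <- c(spam = log_score(new_email, "spam"), ham = log_score(new_email, "ham"))
predicted <- names(which.max(scores))
print(predicted)
```

The class with the highest score is selected; the evidence term is never computed because it is the same for both classes.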

- Suppose you receive an email like this:

Hello Dear,

I’d like to offer the best chance to buy Viagra.

If you are interested …

The benefit you get is that …

Also want to suggest a good fund..

Amount of 100 million US$ …

hope to hear from you.

Yours sincerely,

- Calculate the probabilities \(P(Ham|Viagra)\) and \(P(Spam|Viagra)\), then compare the two.

\[ P(Ham|Viagra) = \frac{P(Viagra|Ham)P(Ham)}{P(Viagra)} \] \[ P(Spam|Viagra) = \frac{P(Viagra|Spam)P(Spam)}{P(Viagra)} \]
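
A worked version of this comparison, with numbers that are purely hypothetical (the prior and the two likelihoods below are assumptions chosen to make the arithmetic easy to follow):

```r
# Hypothetical probabilities, chosen only to illustrate the arithmetic
p_spam <- 0.2
p_ham  <- 0.8
p_viagra_given_spam <- 0.40
p_viagra_given_ham  <- 0.01

# Evidence P(Viagra) via the law of total probability
p_viagra <- p_viagra_given_spam * p_spam + p_viagra_given_ham * p_ham

p_spam_given_viagra <- p_viagra_given_spam * p_spam / p_viagra
p_ham_given_viagra  <- p_viagra_given_ham * p_ham / p_viagra
print(round(c(spam = p_spam_given_viagra, ham = p_ham_given_viagra), 3))
```

Even though only 20% of mail is assumed to be spam, seeing the word moves the posterior to roughly 0.91 for spam against 0.09 for ham under these assumed values.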

Text Mining Techniques

Text Clustering

K-means: partitional clustering method that assigns documents to a fixed number of clusters

Suitable for larger datasets

# K-means clustering

set.seed(42)

k <- 3 # Number of clusters

kmeans_model <- kmeans(tf_idf_matrix[, -1], centers = k)

clusters <- kmeans_model$cluster

# Assigning clusters to original data

text_df %>%

mutate(cluster = clusters)

# A tibble: 5 × 3

doc_id text cluster

<int> <chr> <int>

1 1 Text mining is important in academic research. 1

2 2 Feature extraction is a crucial step in text mining. 1

3 3 Cats and dogs are popular pets. 2

4 4 Elephants are large animals. 3

5 5 Whales are mammals that live in the ocean. 1

Hierarchical clustering: agglomerative method that builds a tree of clusters

Suitable for smaller datasets

# Hierarchical clustering

dist_matrix <- dist(tf_idf_matrix[, -1], method = "euclidean")

dist_matrix

1 2 3 4

2 0.5668202

3 0.7631048 0.7491481

4 0.8469394 0.8343861 0.9204641

5 0.6760124 0.6617837 0.7791871 0.8582969
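
A distance matrix is only the input to hierarchical clustering; the tree still has to be built and cut. The sketch below shows those two remaining steps with base R's `hclust()` and `cutree()` on a small invented matrix. The weights in `m` and the choice of average linkage are assumptions for illustration, not the talk's data or settings.

```r
# Hypothetical document-term weights for four short documents
m <- rbind(
  doc1 = c(1.0, 1.0, 0.0, 0.0),
  doc2 = c(1.0, 0.9, 0.0, 0.0),
  doc3 = c(0.0, 0.0, 1.0, 1.0),
  doc4 = c(0.0, 0.0, 0.9, 1.0)
)

d  <- dist(m, method = "euclidean")   # pairwise Euclidean distances
hc <- hclust(d, method = "average")   # agglomerative merging, average linkage
groups <- cutree(hc, k = 2)           # cut the tree into two clusters
print(groups)
```

Calling `plot(hc)` draws the dendrogram, which is usually how the number of clusters is chosen for small collections.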

Text Mining Techniques

Text Clustering

Latent Dirichlet Allocation (LDA) (a.k.a. topic modeling)

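- How can we identify the topics latent in a large collection of documents?

Social media: What topics are people discussing with each other?

News articles: What is being reported?

Papers/patents: What is being researched and developed?

Product/service reviews: What do customers think about the product or service?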

Text Mining Techniques

Text Clustering

Latent Dirichlet Allocation (LDA) (a.k.a. topic modeling)

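- An analysis tool widely used for large document collections

- Identifies the topics latent in the documents

- Topics are identified from co-occurrence patterns among words

A "topic" is identified as a probabilistic combination of words that tend to appear together

Because the combination is probabilistic, a single word can belong to several topics

- The different contextual meanings a word takes on across topics can be analyzed

e.g., What does the Korean word “타격” (a blow, a hit) mean in an economics article versus a sports article?

e.g., What does “cell” mean in a biology paper versus a fuel-cell paper?

- Depending on the scope of data collection, even very fine-grained contextual differences can be analyzed

- e.g., In a set of documents on emerging technologies, the word “car” features prominently throughout, but the detailed context splits into “electric” cars and “self-driving” cars

- After inspecting the top words that make up each identified topic, the researcher infers what the topic is about

- An exploratory method that leaves considerable room for interpretation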
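
The idea that a topic is a probability distribution over words, and that one word can carry weight in several topics, can be pictured with a small hand-made matrix. The values in `beta` below are invented for illustration; a fitted LDA model would estimate such a matrix from the data.

```r
# Hypothetical topic-word probabilities P(word | topic); each row sums to 1.
beta <- rbind(
  biology   = c(cell = 0.30, protein = 0.40, membrane = 0.25, battery = 0.05),
  fuel_cell = c(cell = 0.35, protein = 0.00, membrane = 0.15, battery = 0.50)
)

# The same word carries weight in more than one topic
print(beta[, "cell"])

# Top words per topic, which a researcher would read in order to label it
top_words <- apply(beta, 1, function(p) names(sort(p, decreasing = TRUE))[1:2])
print(top_words)
```

Reading "protein, cell" against "battery, cell" is what lets the researcher name the two topics, and it is also where the interpretive judgment enters.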

Text Mining Techniques

Text Clustering

Latent Dirichlet Allocation (LDA) (a.k.a. topic modeling)

Probabilistic topic modeling method that assigns documents to a mixture of topics

Suitable for discovering latent topics in text data

library(topicmodels)

# Cast the token counts to a document-term matrix (call assumed from the printed output)

dtm <- tokens %>% count(doc_id, word) %>% cast_dtm(doc_id, word, n)

dtm

<<DocumentTermMatrix (documents: 5, terms: 27)>>

Non-/sparse entries: 34/101

Sparsity : 75%

Maximal term length: 10

Weighting : term frequency (tf)

# LDA topic modeling

k <- 2 # Number of topics

lda_model <- LDA(dtm, k = k, control = list(seed = 42))

gamma <- posterior(lda_model)$topics

# Assigning topics to original data

text_df %>%

mutate(topic = apply(gamma, 1, which.max))

# A tibble: 5 × 3

doc_id text topic

<int> <chr> <int>

1 1 Text mining is important in academic research. 1

2 2 Feature extraction is a crucial step in text mining. 1

3 3 Cats and dogs are popular pets. 2

4 4 Elephants are large animals. 2

5 5 Whales are mammals that live in the ocean. 2

Text Mining Techniques

Sentiment Analysis

Utilize pre-defined lists of words with associated sentiment scores

Calculate overall sentiment by aggregating scores of individual words
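
The aggregation step can be sketched in base R with a made-up scored lexicon. The five entries in `lexicon` are hypothetical and far smaller than any published lexicon; the two sentences are invented as well.

```r
# Hypothetical mini-lexicon with numeric scores (not a published lexicon)
lexicon <- c(important = 1, crucial = 1, popular = 2, terrible = -3, boring = -2)

docs <- c(
  "Text mining is important and crucial",
  "The lecture was boring and the slides were terrible"
)

doc_sentiment <- vapply(docs, function(doc) {
  words  <- strsplit(tolower(doc), "[^a-z]+")[[1]]
  scores <- lexicon[words]      # NA for words missing from the lexicon
  sum(scores, na.rm = TRUE)     # aggregate the matched scores
}, numeric(1), USE.NAMES = FALSE)

print(doc_sentiment)
```

Summing is the simplest aggregate. It ignores negation ("not important") and document length, both of which are common reasons to move to the learned approaches.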

# Sentiment analysis using NRC lexicon

sentiments_nrc <- tokens %>%

inner_join(get_sentiments("nrc"), by = "word")

sentiments_nrc

# A tibble: 7 × 3

doc_id word sentiment

<int> <chr> <chr>

1 1 important positive

2 1 important trust

3 1 academic positive

4 1 academic trust

5 2 feature positive

6 2 crucial positive

7 2 crucial trust

sentiments_nrc %>%

group_by(doc_id, sentiment) %>%

count(sentiment) %>%

ggplot(aes(x = as.factor(doc_id),

y = n, fill = sentiment)) +

geom_bar(stat = 'identity',

position = 'fill') +

labs(x = "Document", y = NULL) +

theme_bw()

Train a supervised machine learning model on labeled sentiment data

Apply the trained model to predict sentiment of new text

library(rpart)

# Train a decision tree model (rpart fits a single tree, not a random forest)

train_data <- tf_idf_matrix %>%

left_join(sentiments_nrc, by = "doc_id") %>%

relocate(sentiment, .after = doc_id) %>%

drop_na %>%

select(-doc_id)

train_data

# A tibble: 7 × 29

sentiment a academic and animals are cats crucial dogs elephants

<chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 positive 0 0.230 0 0 0 0 0 0 0

2 trust 0 0.230 0 0 0 0 0 0 0

3 positive 0 0.230 0 0 0 0 0 0 0

4 trust 0 0.230 0 0 0 0 0 0 0

5 positive 0.179 0 0 0 0 0 0.179 0 0

6 positive 0.179 0 0 0 0 0 0.179 0 0

7 trust 0.179 0 0 0 0 0 0.179 0 0

# ℹ 19 more variables: extraction <dbl>, feature <dbl>, important <dbl>,

# `in` <dbl>, is <dbl>, large <dbl>, live <dbl>, mammals <dbl>, mining <dbl>,

# ocean <dbl>, pets <dbl>, popular <dbl>, research <dbl>, step <dbl>,

# text <dbl>, that <dbl>, the <dbl>, whales <dbl>, word <chr>

model_rf <- rpart(sentiment ~ a + academic + crucial,

data = train_data)

# Predict sentiment for new text

predictions <- predict(model_rf, newdata = tf_idf_matrix)

predictions

positive trust

[1,] 0.5714286 0.4285714

[2,] 0.5714286 0.4285714

[3,] 0.5714286 0.4285714

[4,] 0.5714286 0.4285714

[5,] 0.5714286 0.4285714

Train a deep learning model, such as a Recurrent Neural Network (RNN) or Transformer, on labeled sentiment data

Apply the trained model to predict sentiment of new text

Text Mining Techniques

Named Entity Recognition

Utilize predefined patterns and rules to identify entities in text

Examples include regular expressions and dictionary lookups
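
A rule-based recognizer that combines both ideas can be sketched in base R: a regular expression proposes capitalized tokens as candidates, and a dictionary lookup assigns the entity type. The two dictionaries and the function name `tag_entities` are hypothetical and exist only for this illustration.

```r
# Hypothetical dictionaries; a real system would use much larger ones
organizations <- c("Google", "Microsoft", "OpenAI")
people        <- c("John", "Alice", "Bob")

tag_entities <- function(sentence) {
  # Regular expression: candidate entities are capitalized tokens
  candidates <- regmatches(sentence, gregexpr("[A-Z][A-Za-z]+", sentence))[[1]]
  # Dictionary lookup: label each candidate, or mark it "O" (no entity)
  type <- ifelse(candidates %in% organizations, "ORG",
          ifelse(candidates %in% people, "PERSON", "O"))
  data.frame(entity = candidates, type = type, stringsAsFactors = FALSE)
}

result <- tag_entities("Bob is a researcher at OpenAI.")
print(result)
```

The approach is transparent but brittle: any name missing from the dictionaries is labeled "O", which is the usual motivation for the learned approaches.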

# Example data

text_df <- data.frame(

doc_id = 1:3,

text = c(

"John works at Google.",

"Alice is employed by Microsoft.",

"Bob is a researcher at OpenAI."

),

stringsAsFactors = FALSE

)

text_df

doc_id text

1 1 John works at Google.

2 2 Alice is employed by Microsoft.

3 3 Bob is a researcher at OpenAI.

# Define a simple regular expression pattern for person-organization relations

relation_pattern <- "([A-Z][a-z]+) (?:works at|is employed by|is a researcher at) ([A-Z][A-Za-z]+)"

# Extract relations from text

str_match_all(text_df$text, relation_pattern) %>%

lapply(function(matches) {

if (nrow(matches) > 0) {

return(data.frame(

person = matches[, 2],

organization = matches[, 3],

stringsAsFactors = FALSE

))

} else {

return(NULL)

}

}) %>% do.call(rbind, .)

person organization

1 John Google

2 Alice Microsoft

3 Bob OpenAI

Train a supervised machine learning model on labeled entity data

Apply the trained model to recognize entities in new text

Train a deep learning model, such as a BiLSTM-CRF or Transformer, on labeled entity data

Apply the trained model to recognize entities in new text

Text Mining Techniques

Relation Extraction

Keyword network analysis is grounded in social network analysis (SNA) and graph theory.

SNA allows researchers to study relationships, interactions, and connections between entities (in this case, keywords).

Graph theory provides a mathematical framework to analyze and visualize complex networks, allowing for a better understanding of the structure and organization of the data.

Identify key topics and themes: Keyword network analysis can help researchers identify the most important keywords or concepts in a given text dataset, providing insight into the main topics and themes.

Uncover hidden relationships: By visualizing and analyzing the connections between keywords, researchers can discover hidden relationships and patterns in the data that might not be apparent through traditional text analysis methods.

Facilitate interdisciplinary research: Keyword network analysis can reveal connections between seemingly unrelated research areas, facilitating interdisciplinary collaboration and knowledge transfer.

Inform research design and sampling: By identifying the most influential keywords or topics in a dataset, researchers can target their efforts on specific areas of interest, enabling more efficient research design and sampling strategies.

Support hypothesis generation and validation: The visualization and analysis of keyword networks can help researchers generate and validate hypotheses about the relationships between different concepts, leading to a deeper understanding of the underlying phenomena.

Text Mining Techniques

Relation Extraction

- A. Preprocessing and extracting keywords

- B. Co-occurrence matrix and network

- C. Analyzing keyword network

Tokenization

Stop word removal

Stemming or lemmatization (optional)

Sample text: 6 sentences

Text mining and data mining are essential techniques in data science, and they help analyze large amounts of textual data. Machine learning, natural language processing, and deep learning are crucial techniques for text mining and data analysis. Text mining can reveal insights in large collections of documents, uncovering hidden patterns and trends. Big data analytics involves data mining, machine learning, text mining, and statistical analysis. Sentiment analysis is a popular application of text mining, natural language processing, and machine learning, often used for social media analytics. Data visualization plays a significant role in understanding patterns and trends in data mining results, making complex information more accessible.

# Example dataset

text_data <- tibble(

doc_id = 1:6,

text = c("Text mining and data mining are essential techniques in data science, and they help analyze large amounts of textual data.",

"Machine learning, natural language processing, and deep learning are crucial techniques for text mining and data analysis.",

"Text mining can reveal insights in large collections of documents, uncovering hidden patterns and trends.",

"Big data analytics involves data mining, machine learning, text mining, and statistical analysis.",

"Sentiment analysis is a popular application of text mining, natural language processing, and machine learning, often used for social media analytics.",

"Data visualization plays a significant role in understanding patterns and trends in data mining results, making complex information more accessible.")

)

text_data

# A tibble: 6 × 2

doc_id text

<int> <chr>

1 1 Text mining and data mining are essential techniques in data science, …

2 2 Machine learning, natural language processing, and deep learning are c…

3 3 Text mining can reveal insights in large collections of documents, unc…

4 4 Big data analytics involves data mining, machine learning, text mining…

5 5 Sentiment analysis is a popular application of text mining, natural la…

6 6 Data visualization plays a significant role in understanding patterns …

# Tokenization

tokens <- text_data %>%

unnest_tokens(word, text) %>%

anti_join(stop_words) %>%

group_by(doc_id) %>%

count(word, sort = TRUE)

tokens

# A tibble: 67 × 3

# Groups: doc_id [6]

doc_id word n

<int> <chr> <int>

1 1 data 3

2 1 mining 2

3 2 learning 2

4 4 data 2

5 4 mining 2

6 6 data 2

7 1 amounts 1

8 1 analyze 1

9 1 essential 1

10 1 science 1

# ℹ 57 more rows

Calculate word co-occurrence matrix

Convert co-occurrence matrix to a graph object

Visualize the keyword network
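
The first step has a compact linear-algebra form: if `dtm` is a binary document-term matrix, then `t(dtm) %*% dtm` counts, for every pair of words, the number of documents that contain both. The base-R sketch below uses three invented token lists as a stand-in for the real corpus.

```r
# Three toy documents (hypothetical), already tokenized and cleaned
docs <- list(
  c("text", "mining", "data"),
  c("text", "mining", "learning"),
  c("data", "learning")
)
vocab <- sort(unique(unlist(docs)))

# Binary document-term matrix: 1 if the word appears in the document
dtm <- t(vapply(docs, function(d) as.numeric(vocab %in% d), numeric(length(vocab))))
colnames(dtm) <- vocab

# crossprod(dtm) is t(dtm) %*% dtm: documents shared by each word pair
co_occurrence <- crossprod(dtm)
diag(co_occurrence) <- 0   # drop self-pairs
print(co_occurrence)
```

The result is symmetric, so each pair appears twice (once per direction), which is also why an edge list derived from it lists every pair in both orders.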

# Calculate co-occurrence matrix

co_occurrence_matrix <- tokens %>%

ungroup() %>%

pairwise_count(word, doc_id, sort = TRUE)

co_occurrence_matrix

# A tibble: 586 × 3

item1 item2 n

<chr> <chr> <dbl>

1 text mining 5

2 mining text 5

3 mining data 4

4 data mining 4

5 text data 3

6 learning mining 3

7 analysis mining 3

8 machine mining 3

9 mining learning 3

10 text learning 3

# ℹ 576 more rows

# Filter edges and create graph object

filtered_edges <- co_occurrence_matrix %>%

filter(n >= 2)

keyword_network <- graph_from_data_frame(filtered_edges)

keyword_network

IGRAPH 0350ef0 DN-- 13 88 --

+ attr: name (v/c), n (e/n)

+ edges from 0350ef0 (vertex names):

[1] text ->mining mining ->text mining ->data

[4] data ->mining text ->data learning ->mining

[7] analysis ->mining machine ->mining mining ->learning

[10] text ->learning analysis ->learning machine ->learning

[13] data ->text learning ->text analysis ->text

[16] machine ->text mining ->analysis learning ->analysis

[19] text ->analysis machine ->analysis mining ->machine

[22] learning ->machine text ->machine analysis ->machine

+ ... omitted several edges

# Visualize the keyword network

ggraph(keyword_network, layout = "fr") +

geom_edge_link(aes(edge_alpha = n), show.legend = FALSE) +

geom_node_point(color = "blue", size = 5) +

geom_node_text(aes(label = name), vjust = 1, hjust = 1, size = 10) +

theme_graph(base_family = "Arial") +

labs(title = "Keyword Co-occurrence Network")

To understand the graph better, let’s examine some of the connections:

text -> mining: The keyword ‘text’ is connected to the keyword ‘mining’. This edge represents that ‘text’ and ‘mining’ co-occur in the dataset, forming the term ‘text mining’.

mining -> data: The keyword ‘mining’ is connected to the keyword ‘data’, indicating that these two terms appear together, forming the term ‘data mining’.

learning -> machine: The keyword ‘learning’ is connected to the keyword ‘machine’, representing the co-occurrence of these two terms, forming ‘machine learning’.

analysis -> text: The keyword ‘analysis’ is connected to the keyword ‘text’, suggesting that these two terms co-occur in the dataset, forming the term ‘text analysis’.

From this graph, you can observe the following:

Keywords related to data analysis techniques, such as ‘text mining’, ‘data mining’, ‘machine learning’, and ‘analysis’, are strongly connected, indicating that they are frequently discussed together in the dataset.

The term ‘text mining’ is connected to ‘data mining’, ‘machine learning’, and ‘analysis’, suggesting that these techniques are closely related and are often mentioned in the context of text analysis.

The term ‘machine learning’ is connected to ‘text mining’, ‘data mining’, and ‘analysis’, highlighting its importance and relevance to different data analysis techniques.

Degree centrality: Number of connections for each node

Betweenness centrality: Importance of a node as a connector in the network

Community detection: Clustering of nodes in the network
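
Degree centrality is simple enough to compute by hand from an adjacency matrix. The four-node network below is hypothetical and undirected. In a directed graph that stores each co-occurring pair in both directions, a degree that counts incoming and outgoing edges together comes out at twice the number of neighbours.

```r
# Hypothetical undirected keyword network as a symmetric adjacency matrix
nodes <- c("text", "mining", "data", "trends")
adj <- matrix(0, 4, 4, dimnames = list(nodes, nodes))
adj["text", "mining"]   <- 1
adj["mining", "data"]   <- 1
adj["mining", "trends"] <- 1
adj <- adj + t(adj)   # mirror the edges so the matrix is symmetric

# Degree centrality: number of neighbours of each node
degree_centrality <- rowSums(adj)
print(degree_centrality)
```

Here "mining" has the highest degree and also sits on every path between the other three nodes, which is the situation betweenness centrality is designed to detect.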

# Degree centrality

degree_centrality <- degree(keyword_network)

# Betweenness centrality

betweenness_centrality <- betweenness(keyword_network)

tibble(node = names(degree_centrality),

degree_centrality = degree(keyword_network)) %>%

left_join(

tibble(node = names(degree_centrality),

betweenness_centrality = betweenness(keyword_network))

) -> node_data

node_data

# A tibble: 13 × 3

node degree_centrality betweenness_centrality

<chr> <dbl> <dbl>

1 text 20 8.8

2 mining 24 48.8

3 data 12 2

4 learning 18 2.8

5 analysis 18 2.8

6 machine 18 2.8

7 techniques 6 0

8 language 14 0

9 natural 14 0

10 processing 14 0

11 patterns 4 0

12 trends 4 0

13 analytics 10 0

# Plot degree centrality against betweenness centrality

node_data %>%

ggplot(aes(x = degree_centrality,

y = betweenness_centrality)) +

geom_text_repel(aes(label = node), size = 10)

# Same plot, restricted to nodes with betweenness centrality below 7

node_data %>%

filter(betweenness_centrality < 7) %>%

ggplot(aes(x = degree_centrality,

y = betweenness_centrality)) +

geom_text_repel(aes(label = node), size = 10)

# Community detection using Louvain method

# Convert the directed graph to an undirected graph

undirected_keyword_network <- as.undirected(keyword_network, mode = "collapse")

# Perform community detection using the Louvain algorithm

louvain_communities <- cluster_louvain(undirected_keyword_network)

# Print the community assignments

print(louvain_communities)

IGRAPH clustering multi level, groups: 3, mod: 0.1

+ groups:

$`1`

[1] "text" "data" "techniques"

$`2`

[1] "mining" "patterns" "trends"

$`3`

[1] "learning" "analysis" "machine" "language" "natural"

[6] "processing" "analytics"

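# Attach centrality and community membership as vertex attributes

V(keyword_network)$degree_centrality <- degree_centrality

V(keyword_network)$louvain_communities_char <- as.character(membership(louvain_communities))

# Visualize the keyword network with community colors

ggraph(keyword_network) +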

geom_edge_link(aes(width = n), alpha = 0.5) +

geom_node_point(aes(size = degree_centrality,

col = louvain_communities_char)) +

geom_node_text(aes(label = name, color = louvain_communities_char),

vjust = 1.5, hjust = 1.5,

size = 10) +

scale_color_discrete(name = "Community") + # add a legend for community colors

theme_graph(base_family = "Arial") +

labs(title = "Keyword Network with Community Detection")

Applications in Academic Research

Literature Reviews and Meta-Analyses:

Summarization of articles (Keywords and Topics): Text mining techniques can extract keywords and main topics from research articles, allowing researchers to quickly understand the content and focus of a given paper.

Meta-analysis: By aggregating and analyzing findings from multiple research studies, text mining can help researchers draw more reliable conclusions and identify patterns and trends across a body of literature.

Identifying research trends and gaps: Text mining can help researchers identify emerging research trends, patterns, and potential gaps in the literature by analyzing large collections of articles and tracking the frequency of specific terms, phrases, and topics over time.

Analyzing online academic discussions: Researchers can use text mining to analyze discussions from academic forums, social media, and other online platforms, providing insights into prevailing opinions, questions, and debates within the research community.

Citation network analysis: Text mining can be used to create citation networks, which help researchers visualize the relationships between publications, authors, and research topics, enabling them to identify influential works and collaboration patterns in their field.

Applications in Academic Research

Social Media Analysis for Public Opinion on Academic Topics:

Trend, Keywords, and topic analysis: Text mining can be used to analyze social media content and identify popular trends, keywords, and topics related to academic research, giving researchers insights into public interest and awareness of their research area.

Information flow: By tracking the spread of information on social media platforms, researchers can understand how academic research findings are disseminated and shared among different communities, helping them develop strategies to improve communication and outreach.

Sentiment analysis: Text mining can be used to analyze the sentiment of social media posts and comments related to academic research, providing researchers with insights into public perceptions and opinions about specific research topics or findings.

Network analysis (among actors, keywords, etc.): Researchers can use text mining to analyze the relationships among different actors (e.g., individuals, organizations, or countries) and keywords within social media networks, helping them identify key influencers, collaboration opportunities, and areas of common interest. This analysis can provide valuable insights into the broader impact and reach of their research within various communities.
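
As a rough sketch of the sentiment analysis step described above, the base R snippet below scores posts against a small word list. The `lexicon`, the example `posts`, and the helper `score_post()` are all hypothetical. A real analysis would rely on a validated sentiment dictionary or a trained classifier, and would need to deal with negation and sarcasm, which this sketch ignores.

```r
# Hypothetical toy lexicon: +1 for positive words, -1 for negative words.
lexicon <- c(great = 1, useful = 1, promising = 1,
             flawed = -1, misleading = -1, boring = -1)

# Invented example posts about a research paper.
posts <- c(
  "Great study, really useful findings!",
  "The method is flawed and the headline is misleading.",
  "New paper on media framing published today."
)

# Score a post as the sum of the lexicon values of its words;
# words that are not in the lexicon contribute nothing.
score_post <- function(post, lexicon) {
  cleaned <- tolower(gsub("[^[:alpha:][:space:]]", " ", post))
  words <- strsplit(cleaned, "\\s+")[[1]]
  sum(lexicon[words], na.rm = TRUE)
}

scores <- vapply(posts, score_post, numeric(1), lexicon = lexicon, USE.NAMES = FALSE)
labels <- ifelse(scores > 0, "positive", ifelse(scores < 0, "negative", "neutral"))
print(data.frame(score = scores, label = labels))
```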

Challenges and Limitations

A. Handling unstructured and noisy data:

Heterogeneous formats: Academic research articles and texts come in various formats, such as PDFs, Word documents, and HTML. Text mining techniques need to handle and convert these diverse formats into structured data for analysis.

Data cleaning: Raw text data usually contain typos, grammatical errors, and inconsistencies that can affect the accuracy of the analysis. Text mining techniques must be robust enough to address these issues and clean the data.

Ambiguity: Text data often contain ambiguous terms, phrases, or sentences that can have multiple interpretations. Identifying and resolving these ambiguities is a significant challenge in text mining.
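
The cleaning step mentioned above might look like the following in base R. The function name `clean_text()` and the choice and order of steps are assumptions made for illustration. The pattern keeps only ASCII letters and digits, so it suits English text only and would need adapting for Korean or other scripts.

```r
# Minimal cleaning pipeline for raw English text (illustrative, not exhaustive).
clean_text <- function(x) {
  x <- gsub("<[^>]+>", " ", x)             # drop leftover HTML tags
  x <- tolower(x)                           # normalise case
  x <- gsub("[^a-z0-9[:space:]]", " ", x)   # replace punctuation and symbols with spaces
  x <- gsub("\\s+", " ", x)                 # collapse runs of whitespace
  trimws(x)                                 # strip leading and trailing spaces
}

# Invented examples of noisy input from HTML and PDF extraction.
raw <- c(
  "<p>Text   Mining, in  <b>Academic</b> Research!</p>",
  "  PDF-extracted\ttext\n with noise... "
)
cleaned <- clean_text(raw)
print(cleaned)
```

Typos and ambiguous terms are not handled here. Those usually need spell-checking or manual dictionaries on top of this kind of normalisation.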

Challenges and Limitations

B. Addressing language and domain-specific challenges:

Multilingual texts: Academic research is published in multiple languages, requiring text mining techniques to be adaptable to different languages and character sets.

Domain-specific jargon: Each research domain has its own terminology and phrases that generic text mining tools may not handle well. Developing techniques that can recognize and analyze these domain-specific terms is crucial.

Context-dependent meaning: The meaning of a term or phrase may vary depending on the context in which it is used. Text mining techniques need to capture these context-dependent meanings accurately.
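
One simple way to make a generic tokenizer respect domain jargon is to protect known multi-word terms before splitting the text. The sketch below assumes a hand-made dictionary. Both `domain_terms` and `tokenize_with_terms()` are hypothetical names, and the approach does nothing for context-dependent meaning, which generally requires richer models.

```r
# Hypothetical domain dictionary: multi-word terms that should stay together.
domain_terms <- c("agenda setting", "text mining", "topic model")

# Join each known term with an underscore, then split the text on whitespace.
tokenize_with_terms <- function(text, terms) {
  text <- tolower(text)
  for (term in terms) {
    joined <- gsub(" ", "_", term, fixed = TRUE)
    text <- gsub(term, joined, text, fixed = TRUE)
  }
  strsplit(text, "\\s+")[[1]]
}

tokens <- tokenize_with_terms("Text mining reveals agenda setting effects", domain_terms)
print(tokens)
```

Without the dictionary, "agenda" and "setting" would be counted as two unrelated words, and the media-studies concept would be lost in the frequency tables.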

Challenges and Limitations

C. Ethical and legal considerations:

Data privacy: Text mining may involve processing sensitive information, such as personal or proprietary data. Researchers must ensure that they adhere to data privacy regulations and protect the confidentiality of the data.

Copyright and intellectual property: Text mining techniques may involve the use of copyrighted material. It is essential to understand and comply with copyright laws and obtain the necessary permissions for using such content.

Bias and fairness: Text mining algorithms can inadvertently perpetuate biases present in the training data. Researchers should be aware of potential biases and develop strategies to mitigate their impact on the analysis.
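
For the data privacy point, one common precaution is to mask direct identifiers before text is stored or shared. The base R sketch below is only an assumption about how that could be done. `mask_identifiers()` is a hypothetical helper, its patterns are deliberately simple, and masking alone does not guarantee compliance with any privacy regulation.

```r
# Replace e-mail addresses and @handles with placeholders.
# The patterns are simplified and will not catch every identifier.
mask_identifiers <- function(x) {
  x <- gsub("[[:alnum:]._%+-]+@[[:alnum:].-]+\\.[[:alpha:]]{2,}", "<EMAIL>", x)
  gsub("@[[:alnum:]_]+", "<USER>", x)
}

# Invented example post.
post <- "Contact jane.doe@example.org or @jane_doe for the dataset"
masked <- mask_identifiers(post)
print(masked)
```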

Challenges and Limitations

D. Scalability and computational complexity:

Large datasets: Academic research data can be vast, and text mining techniques need to be able to efficiently handle and process these large datasets.

High dimensionality: Text data are inherently high-dimensional because every unique word or phrase becomes a separate feature. Techniques must manage this dimensionality, for example by pruning rare terms or applying dimensionality reduction, to avoid the “curse of dimensionality” and maintain computational efficiency.

Real-time processing: In some cases, text mining applications may require real-time analysis and processing of text data. Developing techniques that can handle real-time processing demands while maintaining accuracy is a significant challenge.
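
To make the high-dimensionality point concrete, the base R sketch below builds a small document-term matrix and then drops rare terms. The three documents and the `min_docs` threshold are invented for illustration. At realistic scale one would use sparse matrices from a dedicated text mining package rather than a dense base R matrix.

```r
# Invented example documents.
docs <- c(
  d1 = "text mining finds patterns in text",
  d2 = "topic models summarise text collections",
  d3 = "mining social media text for sentiment"
)

# Build a document-term count matrix: one row per document, one column per word.
tokens <- strsplit(tolower(docs), "\\s+")
vocab <- sort(unique(unlist(tokens)))
count_terms <- function(tk) as.numeric(table(factor(tk, levels = vocab)))
dtm <- t(vapply(tokens, count_terms, numeric(length(vocab))))
colnames(dtm) <- vocab

# Keep only terms that appear in at least `min_docs` documents (assumed threshold).
min_docs <- 2
doc_freq <- colSums(dtm > 0)
dtm_small <- dtm[, doc_freq >= min_docs, drop = FALSE]

cat("terms before:", ncol(dtm), " after:", ncol(dtm_small), "\n")
```

Even in this toy corpus most words occur in a single document, which is why pruning by document frequency is a common first step before heavier techniques.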

Conclusion and Future Directions

A. The growing importance of text mining in academic research:

Expanding data sources: With the exponential growth of digital content and the increasing accessibility of various data sources, text mining will continue to play a pivotal role in extracting valuable information and insights from large volumes of unstructured data.

Facilitating knowledge discovery: Text mining techniques enable researchers to identify patterns, trends, and relationships within and across research domains that may not be evident through traditional research methods.

Enhancing research efficiency: By automating data analysis and processing tasks, text mining can save researchers time and resources, allowing them to focus on more in-depth analysis and interpretation of results.

Conclusion and Future Directions

B. Continued development of new techniques and methodologies:

Machine learning and artificial intelligence: The integration of machine learning and artificial intelligence methods into text mining will lead to more sophisticated and accurate analysis techniques, capable of handling complex and ambiguous language structures.

Natural language processing advancements: Ongoing improvements in natural language processing will enable more accurate understanding and interpretation of human language in text data, leading to better results in text mining tasks.

Domain-specific tools: The development of specialized text mining tools tailored to specific research domains will facilitate more accurate and efficient analysis of domain-specific jargon and concepts.

Conclusion and Future Directions

C. Encouraging interdisciplinary collaboration and research:

Cross-disciplinary partnerships: Collaborations between computer scientists, linguists, and domain experts will foster the development of novel techniques and approaches that address the unique challenges of text mining in specific research areas.

Shared resources and infrastructure: Establishing shared platforms, datasets, and software tools will encourage researchers from different disciplines to explore text mining applications, promoting innovation and knowledge exchange.

Training and education: Providing training and educational opportunities in text mining will help researchers across disciplines develop the skills needed to apply text mining techniques effectively in their research.

Conclusion and Future Directions

D. Addressing challenges and limitations for more robust applications:

Tackling unstructured and noisy data: Developing techniques to better handle, clean, and preprocess unstructured and noisy data will improve the accuracy and reliability of text mining applications in academic research.

Addressing ethical and legal concerns: Researchers must continue to engage in discussions and develop guidelines to address data privacy, copyright, and intellectual property issues related to text mining.

Improving scalability and computational efficiency: As the volume of data continues to grow, researchers need to develop more scalable and computationally efficient text mining techniques to handle large-scale datasets and real-time processing demands.